Below you can find a list of my publications, in reverse chronological order.

Disproving Program Equivalence with LLMs. Miltiadis Allamanis, Pengcheng Yin. 2025. TLDR: Use LLMs to disprove program equivalence in a white-box way, driven by execution feedback.

Deep End-to-end Causal Inference. Tomas Geffner, Javier Antoran, Adam Foster, Wenbo Gong, Chao Ma, Emre Kiciman, Amit Sharma, Angus Lamb, Martin Kukla, Nick Pawlowski, Miltiadis Allamanis, Cheng Zhang. TMLR 2024. TLDR: Causal Discovery and Inference End-to-End.

Do Large Code Models Understand Programming Concepts? A Black-box Approach. Ashish Hooda, Mihai Christodorescu, Miltos Allamanis, Aaron Wilson, Kassem Fawaz, Somesh Jha. ICML 2024. TLDR: Perturb the code, check LLM robustness.

NExT: Teaching Large Language Models to Reason about Code Execution. Ansong Ni, Miltiadis Allamanis, Arman Cohan, Yinlin Deng, Kensen Shi, Charles Sutton, Pengcheng Yin. ICML 2024. TLDR: Teach an LLM to fix code through (useful) rationales about the code and its execution.

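Unsupervised Evaluation of Code LLMs with Round-Trip Correctness. Miltiadis Allamanis, Sheena Panthaplackel, Pengcheng Yin. ICML 2024. TLDR: Check whether a model can perform a round trip (describe code in natural language, then regenerate code from that description) while preserving semantics. If it can, that's a good sign.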

Epicure: Distilling Sequence Model Predictions into Patterns. Miltiadis Allamanis, Earl T. Barr. 2023. TLDR: Distill model predictions into interpretable patterns that can be used for anomaly detection.

CoRGi: Content-Rich Graph Neural Networks with Attention. Jooyeon Kim, Angus Lamb, Simon Woodhead, Simon Peyton Jones, Cheng Zhang, Miltiadis Allamanis. KDD 2022. TLDR: A method to embed content information within nodes in a GNN. Evaluated on imputation scenarios.

AdaptivePaste: Code Adaptation through Learning Semantics-aware Variable Usage Representations. Xiaoyu Liu, Jinu Jang, Neel Sundaresan, Miltiadis Allamanis, Alexey Svyatkovskiy. 2022. TLDR: Learn to adapt pasted snippets within some code context using transformers.

HEAT: Hyperedge Attention Networks. Dobrik Georgiev, Marc Brockschmidt, Miltiadis Allamanis. TMLR 2022. TLDR: Generalize transformers and GNNs to typed and qualified hypergraphs.

JEMMA: An Extensible Java Dataset for ML4Code Applications. Anjan Karmakar, Miltiadis Allamanis, Romain Robbes. EMSE 2022. TLDR: A large Java dataset with compile-time metadata.

Learning to Complete Code with Sketches. Daya Guo, Alexey Svyatkovskiy, Jian Yin, Nan Duan, Marc Brockschmidt, Miltiadis Allamanis. ICLR 2022. TLDR: Automatically generate (code) sketches, placing holes where ambiguity prevents us from predicting terminal tokens.

NS3: Neuro-Symbolic Semantic Code Search. Shushan Arakelyan, Anna Hakhverdyan, Miltiadis Allamanis, Christophe Hauser, Luis Garcia, Xiang Ren. 2022. TLDR: The natural language query is parsed and its structure instantiates neural modules that break down the search problem.

Overwatch: Learning Patterns in Code Edit Sequences. Yuhao Zhang, Yasharth Bajpai, Priyanshu Gupta, Ameya Ketkar, Miltiadis Allamanis, Titus Barik, Sumit Gulwani, Arjun Radhakrishna, Mohammad Raza, Gustavo Soares, Ashish Tiwari. 2022. TLDR: Synthesize edit templates from edit sequences.

Simultaneous Missing Value Imputation and Causal Discovery with Groups. Pablo Morales-Alvarez, Angus Lamb, Simon Woodhead, Simon Peyton Jones, Miltiadis Allamanis, Cheng Zhang. NeurIPS 2022. TLDR: Causal discovery and missing value imputation via GNNs.

Copy that! Editing Sequences by Copying Spans. Sheena Panthaplackel, Miltiadis Allamanis, Marc Brockschmidt. AAAI 2021. TLDR: Learn seq2seq models that can edit sequences by copying long spans.

Fast and Memory-Efficient Neural Code Completion. A. Svyatkovskiy, S. Lee, A. Hadjitofi, M. Riechert, J. Franco, M. Allamanis. Mining Software Repositories 2021. TLDR: Lightweight yet accurate code completion using neural reranking models.

Graph Neural Networks on Program Analysis. M. Allamanis. Graph Neural Networks: Foundations, Frontiers, and Applications 2021. TLDR: A survey of GNNs for learned program analyses.

Self-Supervised Bug Detection and Repair. M. Allamanis, H. Jackson-Flux, M. Brockschmidt. NeurIPS 2021. TLDR: Learn to detect a variety of bugs in source code by asking two models to play a hide-and-seek game: one model inserts a bug, the other tries to find it.

CODIT: Code Editing with Tree-Based Neural Models. S. Chakraborty, M. Allamanis, B. Ray. TSE 2020. TLDR: Model code edits with tree-to-tree neural networks.

Flexeme: Untangling Commits using Lexical Flows. Profir-Petru Pârțachi, Santanu Kumar Dash, Miltiadis Allamanis, Earl T. Barr. FSE 2020.

Typilus: Neural Type Hints. M. Allamanis, E. T. Barr, S. Ducousso, Z. Gao. PLDI 2020. TLDR: Use meta-learning to predict Python type annotations, including rare ones.

The Adverse Effects of Code Duplication in Machine Learning Models of Code. M. Allamanis. SPLASH Onward! 2019. TLDR: Automatically scraped code corpora commonly contain many duplicates, which severely affect evaluations.

CodeSearchNet Challenge: Evaluating the State of Semantic Code Search. H. Husain, H. Wu, T. Gazit, M. Allamanis, M. Brockschmidt. 2019. TLDR: A benchmark for natural language code search using human-provided annotations.

Generative Code Modeling with Graphs. M. Brockschmidt, M. Allamanis, A. L. Gaunt, O. Polozov. ICLR 2019. TLDR: Generate code expressions using asynchronous GNNs.

Learning units-of-measure from scientific code. M. Danish, M. Allamanis, M. Brockschmidt, A. Rice, D. Orchard. SE 4 Science Workshop 2019.

|

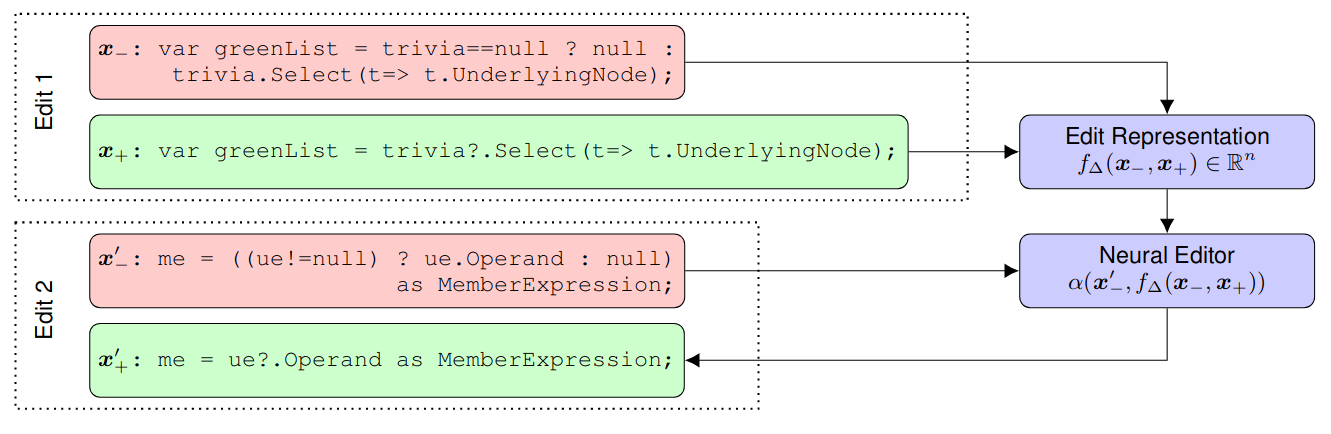

Learning to Represent Edits. P. Yin, G. Neubig, M. Allamanis, M. Brockschmidt, A. L. Gaunt. ICLR 2019. TLDR: How can we represent edits in neural networks? |

A Neural Approach to Decompiled Identifier Renaming. J. Lacomis, P. Yin, E.J. Schwartz, M. Allamanis, C. Le Goues, G. Neubig, B. Vasilescu. ASE 2019.

|

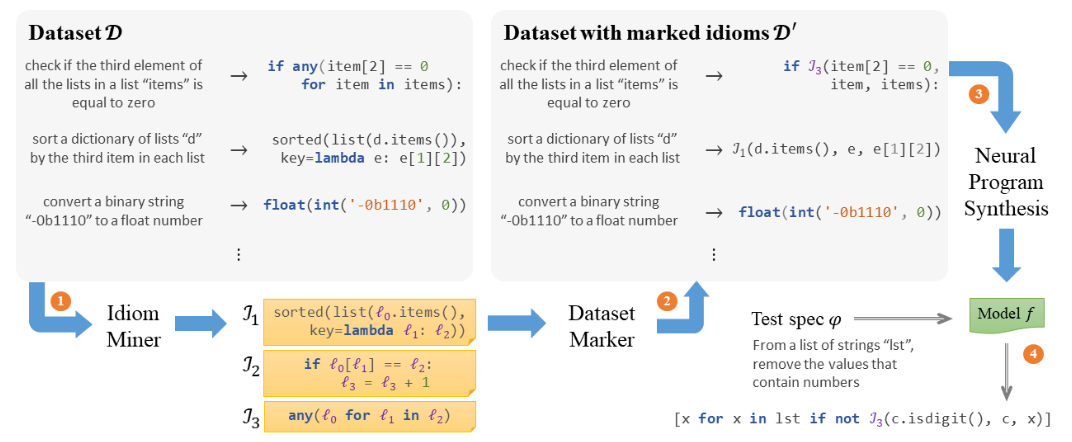

Program Synthesis and Semantic Parsing with Learned Code Idioms. R. Shin, M. Allamanis, M. Brockschmidt, O. Polozov. NeurIPS 2019. TLDR: Use code idioms to improve program synthesis and semantic parsing. |

Structured Neural Summarization. P. Fernandes, M. Allamanis, M. Brockschmidt. ICLR 2019. TLDR: A graph-to-sequence model for improved summarization of code and text.

Constrained Graph Variational Autoencoders for Molecule Design. Q. Liu, M. Allamanis, M. Brockschmidt, A. L. Gaunt. NIPS 2018. TLDR: VAEs for graph encoding and generation.

Deep Learning Type Inference. V. Hellendoorn, C. Bird, E. T. Barr, M. Allamanis. FSE 2018. TLDR: Sequence-based model to predict type annotations.

Learning to Represent Programs with Graphs. M. Allamanis, M. Brockschmidt, M. Khademi. ICLR 2018. TLDR: Represent programs as graphs and use GNNs to find bugs.

|

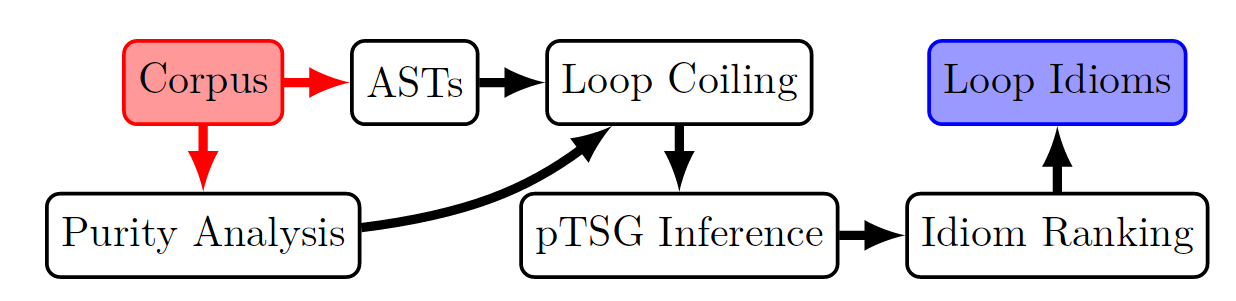

Mining Semantic Loop Idioms from Big Code. M. Allamanis, E. T. Barr, C. Bird, M. Marron, C. Sutton. IEEE Transactions in Software Engineering 2018. |

RefiNym: Using Names to Refine Types. S. Dash, M. Allamanis, E. T. Barr. FSE 2018. TLDR: Automatically refine types, such as strings, respecting type constraints by using data flow and identifier names.

A Survey of Machine Learning for Big Code and Naturalness. M. Allamanis, E. T. Barr, P. Devanbu, C. Sutton. ACM Computing Surveys 2018.

Autofolding for Source Code Summarization. J. Fowkes, P. Chanthirasegaran, R. Ranca, M. Allamanis, M. Lapata, C. Sutton. IEEE Transactions on Software Engineering 2017.

Learning Natural Coding Conventions. M. Allamanis. PhD Dissertation 2017.

Learning Continuous Semantic Representations of Symbolic Expressions. M. Allamanis, P. Chanthirasegaran, P. Kohli, C. Sutton. ICML 2017. TLDR: Can we learn models that distinguish the syntax of a math expression from its semantics?

|

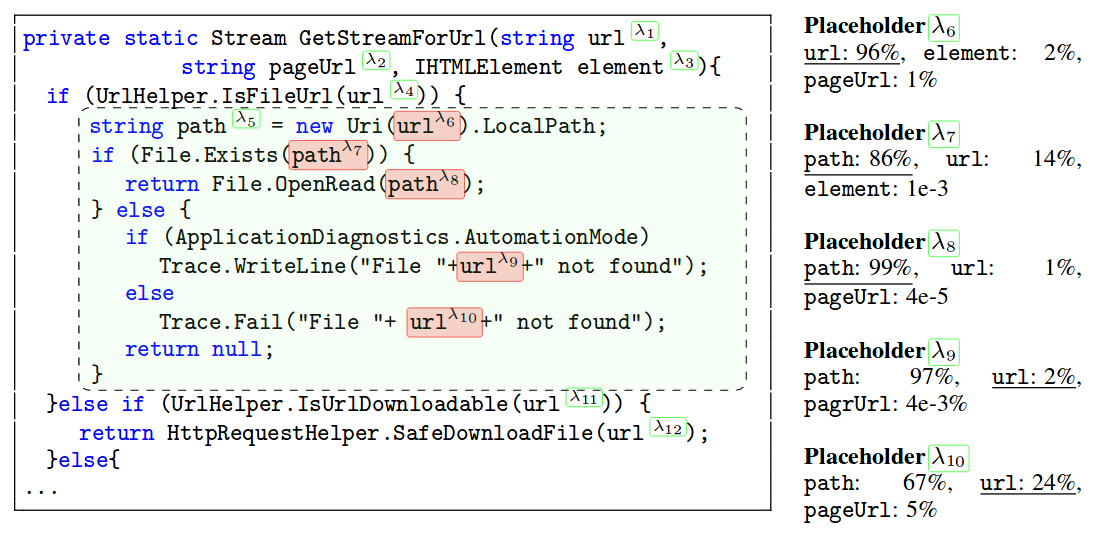

SmartPaste: Learning to Adapt Source Code. M. Allamanis, M. Brockscmidt. 2017. TLDR: Learn to adapt a snippet into new contexts. |

A Convolutional Attention Network for Extreme Summarization of Source Code. M. Allamanis, H. Peng, C. Sutton. ICML 2016. TLDR: A 1D-CNN-to-sequence model to summarize source code.

A Bimodal Modelling of Source Code and Natural Language. M. Allamanis, D. Tarlow, A. D. Gordon, Y. Wei. ICML 2015.

Suggesting Accurate Method and Class Names. M. Allamanis, E. T. Barr, C. Bird, C. Sutton. FSE 2015.

Learning Natural Coding Conventions. M. Allamanis, E. T. Barr, C. Bird, C. Sutton. FSE 2014. TLDR: Coding conventions can be learned and suggested to developers.

Mining Idioms from Source Code. M. Allamanis, C. Sutton. FSE 2014. TLDR: Mine interesting syntactic patterns in code.

Mining Source Code Repositories at Massive Scale Using Language Modeling. M. Allamanis, C. Sutton. MSR 2013.

Why, When, and What: Analyzing Stack Overflow Questions by Topic, Type, and Code. M. Allamanis, C. Sutton. MSR 2013.

Evolution of a Location-based Online Social Network: Analysis and Models. M. Allamanis, S. Scellato, C. Mascolo. IMC 2012.